INFO's Ge Gao is helping develop AI that assists blind professionals in interpreting nonverbal workplace cues

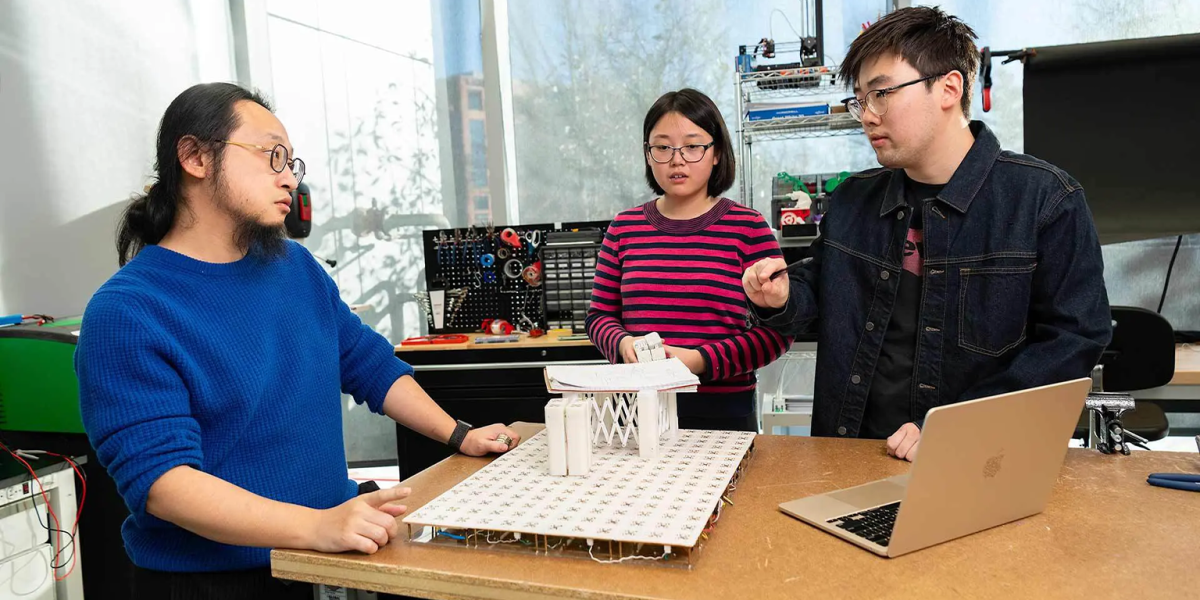

Working in the Small Artifacts (SMART) Lab, Assistant Professor of computer science Huaishu Peng (left) and doctoral students Zining Zhang (center) and Jiasheng Li discuss technology that can assist sight-impaired people. Photo by Mike Morgan Photography. Photo via Maryland Today.

Nonverbal communication like nods, hand gestures and meaningful glances is a part of everyday life and can be crucial for building trust and collaboration at work. But for blind individuals, these subtle visual cues go unnoticed.

Researchers at the University of Maryland and Cornell University could help people with visual impairments experience a fuller range of communication through their development of a wearable artificial intelligence (AI) system that can interpret and convey nonverbal signals.

The project is supported by two seed grants received in 2024 from the Institute for Trustworthy AI in Law & Society (TRAILS) that totaled almost $230,000. It is still in the prototype stage, but the researchers hope to take the technology from their lab space and begin testing it in professional workplace settings within the next 18 months.

“Sight-impaired people continue to play an active role in today’s workforce across sectors and occupations, yet little remains known regarding best practices that can improve their nonverbal interactions with their sighted colleagues,” said Ge Gao, an assistant professor in UMD’s College of Information and a co-principal investigator on the project.

She explains how it could help: In a conversation with a blind colleague, a sighted person instinctively might turn their head and gaze directly at their work partner, seeking acknowledgement of the idea they are talking about. Unable to perceive this silent attempt at interaction, the blind worker is unresponsive, which might lead to uncertainty about the direction of the project.

The AI-infused technology under development at UMD and Cornell will combine tactile (touch-perceived), haptic (motion- or vibration-initiated) and audio feedback to convey nonverbal information in ways that feel natural and unobtrusive.

The devices (which could also be desktop-mounted) might gently vibrate to signal a teammate’s nod or gesture or provide brief audio cues describing important nonverbal actions. AI algorithms, ranging from vision-based systems that detect gestures and body orientation to sensor-driven models that track motion and posture, will be used to interpret these signals. In the scenario Gao describes, the device would alert the wearer to the co-worker’s approval-seeking gaze, allowing them to respond.

The TRAILS project is an extension of previous work done by Gao, Huaishu Peng, an assistant professor of computer science at UMD, and Jiasheng Li, a fourth-year computer science doctoral student at UMD advised by Peng.

That project, called Calico, involved a miniature wearable robotic system that moved along the human body on a cloth track, using magnets and sensors to position itself for optimal sensing of various physical activities.

Gao and Peng both have appointments in the University of Maryland Institute for Advanced Computer Studies, which provides administrative and technical support for many of the TRAILS seed fund projects. They’re collaborating with Malte Jung, an associate professor of information science at Cornell who is also in TRAILS, and who specializes in the design and behavioral aspects of human-robot interaction in group and team settings.

So far, the TRAILS team has surveyed more than 100 blind and sighted participants about how they interpret nonverbal cues in workplace settings, partnering with the National Federation of the Blind (NFB) to broaden their outreach. They are also conducting co-design sessions with both blind and sighted people to directly engage them in shaping AI tools that foster better collaboration.

Peng recently received a 2025 Accessibility Inclusion Fellowship from the NFB—a yearlong award granted to three professors in the state of Maryland annually. The fellowship supports recipients in integrating accessibility principles into their curriculum and incorporating nonvisual teaching methods into at least one of their courses.

The team is creating multimodal datasets and publishing research in fields such as human-computer interaction, human-robot interaction and human-centered AI—all areas of scientific discovery that can benefit future work with blind-sighted teams.

“It’s not just building the technology, it’s also learning from the people involved,” Gao said. “This work will facilitate input from both sides of these interactions, valuing the needs of blind individuals while also addressing their sighted partner’s input.”

The original article was written by Melissa Brachfeld and published by Maryland Today on July 17, 2025.