A UMD-developed app that brings your health information to life

In today’s fast-paced world, where smartphones have become our constant companions, we yearn for innovative solutions to unravel the complexities of our personal data. The question arises: How can we not only navigate our data but also gain valuable insights from it using the very devices we rely on daily? Enter “Data@Hand,” a mobile app created by Young-Ho Kim, Bongshin Lee, Arjun Srinivasan, and Eun Kyoung Choe. The app combines speech and touch interaction to offer a new approach to understanding your personal health data.

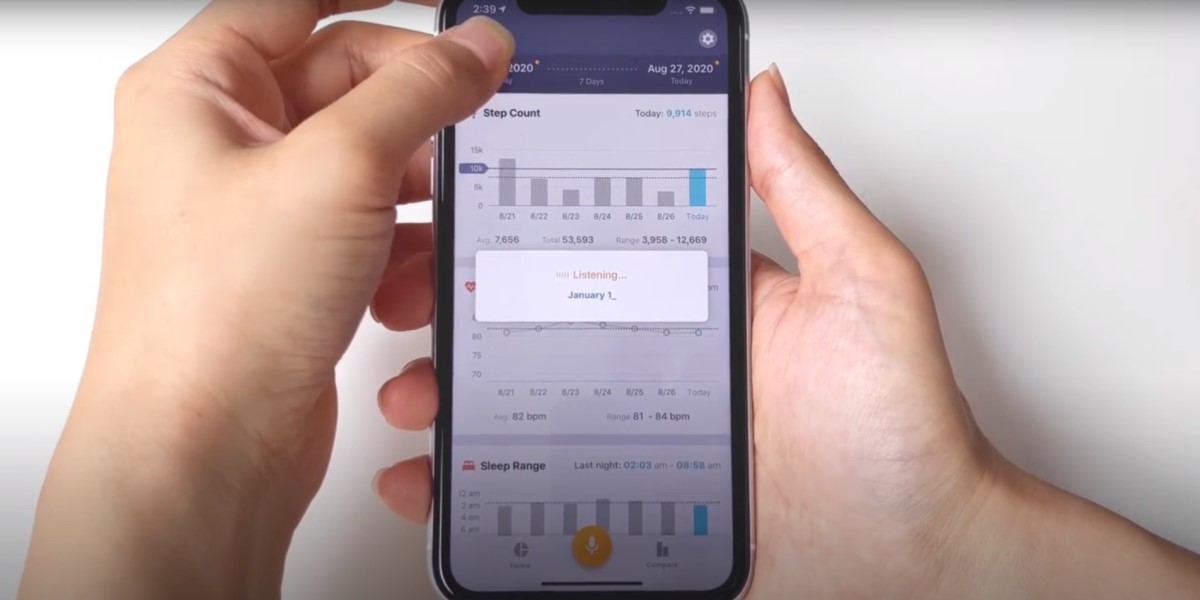

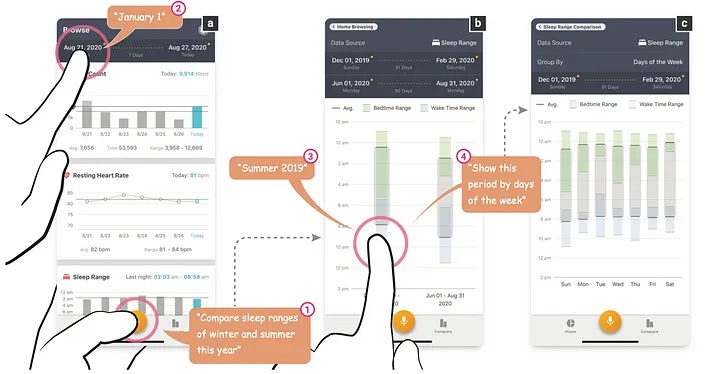

Data@Hand mobile app: supporting multimodal interaction (Image taken from the original paper)

The Mobile Health App Revolution

In the modern era, we have witnessed the proliferation of health and fitness apps, yet their capacity to facilitate deep data exploration often leaves much to be desired. “Data@Hand” is poised to change this by empowering you to effortlessly navigate and compare your personal health data, all within the user-friendly interface of your smartphone. What sets “Data@Hand” apart is its support for three types of interactions — touch-only, speech-only, and touch+speech — which enable flexible time manipulation, temporal comparisons, and data-driven queries.

Let’s dive deeper into these essential functions:

- Seamless Time Manipulation: “Data@Hand” makes time manipulation effortless, allowing you to explore your data with ease. Speech recognition technology, combined with touch interaction, ensures that you can navigate through your data as naturally as having a conversation.

- Temporal Comparisons: Ever wondered how your habits have changed over time? The app provides powerful tools for temporal comparisons, helping you see the bigger picture and subtle trends that may have otherwise gone unnoticed.

- Data-Driven Queries: Unleash the power of your voice with data-driven queries. You can ask questions and issue commands naturally, gaining specific insights and uncovering patterns in your data.
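To make the idea of a temporal comparison concrete, here is a minimal TypeScript sketch that averages daily step counts over two date ranges and reports the difference. The record shape and the function names (`DailyRecord`, `averageSteps`, `compareRanges`) are assumptions made for illustration; they are not taken from the Data@Hand source code.

```typescript
// Hypothetical record shape; the app's real schema is not described in this article.
interface DailyRecord {
  date: string; // ISO date, e.g. "2020-03-01"
  steps: number;
}

interface DateRange {
  start: string; // inclusive ISO date
  end: string; // inclusive ISO date
}

interface Comparison {
  first: number | null; // average steps in the first range
  second: number | null; // average steps in the second range
  difference: number | null; // second minus first, or null if a range is empty
}

// ISO dates in YYYY-MM-DD form sort lexicographically,
// so plain string comparison is enough to test range membership.
function inRange(record: DailyRecord, range: DateRange): boolean {
  return record.date >= range.start && record.date <= range.end;
}

// Average step count over a range, or null when no record falls inside it.
function averageSteps(records: DailyRecord[], range: DateRange): number | null {
  const selected = records.filter((r) => inRange(r, range));
  if (selected.length === 0) {
    return null;
  }
  const total = selected.reduce((sum, r) => sum + r.steps, 0);
  return total / selected.length;
}

// Compare two periods, e.g. the weeks before and after a lifestyle change.
function compareRanges(
  records: DailyRecord[],
  first: DateRange,
  second: DateRange
): Comparison {
  const a = averageSteps(records, first);
  const b = averageSteps(records, second);
  const difference = a === null || b === null ? null : b - a;
  return { first: a, second: b, difference };
}
```

A spoken request such as comparing March with April would, in a sketch like this, come down to one call to `compareRanges` with two date ranges. How the real app turns speech into such a call is not covered here.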

After the app was designed, the researchers conducted an exploratory user study with 13 participants recruited from Reddit to examine if and how multimodal interaction helps people explore their own health data. All participants had been using Fitbit wearables for at least six months. The study was held remotely via Zoom video call due to COVID-19, and participants used the Data@Hand app to explore their own Fitbit data. Participants were of varying ages and had smartphones with screen sizes ranging from 4.7 to 6.1 inches. They were given a tutorial and encouraged to use touch, speech, and touch+speech interactions with the app. The study sessions were recorded, and semi-structured interviews were conducted to gather feedback on their experiences with Data@Hand. The participants successfully adopted multimodal interaction (i.e., speech and touch) for convenient and fluid data exploration.

A Catalyst for Personal Insights

“Data@Hand” is not just another mobile app; it’s a powerful catalyst for personal insights. Imagine comparing your activity levels before and after the COVID-19 lockdown, or gaining a deep understanding of your sleep patterns. With features like speech-only and touch+speech interactions, accessing and interpreting this data becomes a breeze.

Here are some other practical ways you can use “Data@Hand” to supercharge your personal data exploration:

- Health Monitoring: Keep track of your fitness journey, monitoring trends and making informed decisions about your well-being.

- Activity Insights: Compare your daily activities over time and make adjustments to achieve your fitness goals.

- Sleep Patterns: Dive into your sleep data to identify patterns, uncovering insights that could lead to better sleep quality.

- Personal Goals: Set and track personal fitness goals, using your historical data as a guide to success.

- Data-Driven Decisions: Make informed decisions about your health and fitness based on your data, rather than guesswork.

Under the Hood: Implementation & Use

Behind the scenes, “Data@Hand” is built on robust technology. It’s implemented in TypeScript on React Native, which lets it run on both iOS and Android. The app uses the Fitbit REST API to download data into a local SQLite database, and it relies on speech recognition frameworks, including Apple’s speech framework and Microsoft Cognitive Services, to turn your voice commands into actions.
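As a rough illustration of the download-then-cache step, the TypeScript sketch below converts a Fitbit-style step time series into local rows. The response shape (an `activities-steps` array of `dateTime` and `value` strings) follows my reading of Fitbit's public Web API documentation and should be checked against the current reference. The `LocalStepStore` class is a hypothetical in-memory stand-in for the SQLite database, and none of these names come from the app's actual code.

```typescript
// Assumed response shape for a Fitbit step time series; verify against the
// current Fitbit Web API reference before relying on it.
interface FitbitStepsResponse {
  "activities-steps": { dateTime: string; value: string }[];
}

interface StepRow {
  date: string; // ISO date
  steps: number;
}

// Hypothetical in-memory stand-in for a local SQLite table keyed by date.
class LocalStepStore {
  private rows = new Map<string, number>();

  // Insert a row, or overwrite the existing row for the same date.
  upsert(row: StepRow): void {
    this.rows.set(row.date, row.steps);
  }

  get(date: string): number | undefined {
    return this.rows.get(date);
  }

  size(): number {
    return this.rows.size;
  }
}

// Convert the string values in the response into numeric rows,
// skipping entries whose value is not a finite number.
function parseSteps(response: FitbitStepsResponse): StepRow[] {
  return response["activities-steps"]
    .map((entry) => ({ date: entry.dateTime, steps: Number(entry.value) }))
    .filter((row) => Number.isFinite(row.steps));
}

// Cache every parsed row locally and return how many rows were written.
function cacheSteps(store: LocalStepStore, response: FitbitStepsResponse): number {
  const rows = parseSteps(response);
  rows.forEach((row) => store.upsert(row));
  return rows.length;
}
```

Keeping a local copy means later comparisons and queries can run on the phone without another round trip to the server, which is one plausible reason for the local database described above.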

“Data@Hand” empowers you to understand your data in ways you never thought possible. By combining the power of speech and touch, it provides a versatile, user-friendly platform for delving into your health data.

So, the next time you reach for your smartphone and wonder about your personal health journey, remember that “Data@Hand” is here to bring your data to life. Explore, understand, and take control of your data like never before. Your health is in your hands — and now, it’s “Data@Hand.”

Reference

- Kim, Y. H., Lee, B., Srinivasan, A., & Choe, E. K. (2021, May). Data@Hand: Fostering visual exploration of personal data on smartphones leveraging speech and touch interaction. In Proceedings of the 2021 CHI Conference on Human Factors in Computing Systems (pp. 1–17).

Article originally published on October 25, 2023 in VisUMD, the UMD HCIL blog.