
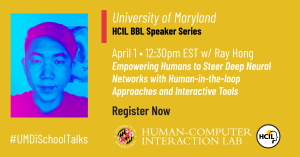

HCIL BBL Speaker Series: Empowering Humans to Steer Deep Neural Networks with Human-in-the-loop Approaches and Interactive Tools

Event Start Date: Thursday, April 1, 2021 - 12:30 pm

Event End Date: Thursday, April 1, 2021 - 1:30 pm

Location: Virtual

When: Every Thursday during the semester, 12:30 pm – 1:30 pm ET

Where: For Zoom info, register here!

Speaker: Sungsoo Ray Hong

Abstract.

As machine learning models have become pervasive in product development and data-driven decision-making across many domains, people’s focus has rapidly shifted from building a well-performing model to understanding how their model works. Recent years have seen an explosion of interest in understanding how Deep Neural Networks (DNNs) work under the hood and, more importantly, how we can adjust the way DNNs work based on our knowledge and expectations. However, DNNs’ architecture offers limited transparency, which poses significant challenges in (1) determining when DNNs make unsuccessful predictions with potential bias and, more importantly, (2) improving the model so that its future behavior aligns with human expectations. In this talk, I will introduce my approach and vision for establishing an interactive platform that assists data scientists in steering DNNs in a more cost-efficient, effective, and useful way. At the beginning of the talk, I will introduce a formative study aimed at deeply understanding the current practice of data scientists who apply explainable AI tools in designing, building, and deploying machine learning models. I will then introduce my recent approaches, which leverage interactive attention mechanisms to empower users to better steer DNNs during the data collection/annotation and model-building stages.

Bio.

Ray Hong is an Assistant Professor in the Department of Information Sciences and Technology at George Mason University. He earned his Ph.D. in Human-Centered Design and Engineering at the University of Washington. At Mason, he directs the Alignment lab, where members focus on bridging the gap between humans’ mental models and the way that AI operates by designing novel tools and establishing theories in Human-Computer Interaction (HCI) and Computer-Supported Cooperative Work (CSCW). His ultimate mission is to improve the way people interact with and tune AIs so that they yield trustworthy and unbiased insights and decisions. Before joining the University of Washington, he had 5 years of industry experience at Samsung Research, where he contributed to commercializing digital products adopted in millions of Samsung’s mobile and home devices.